10-05-2025 13:00

via theguardian.com

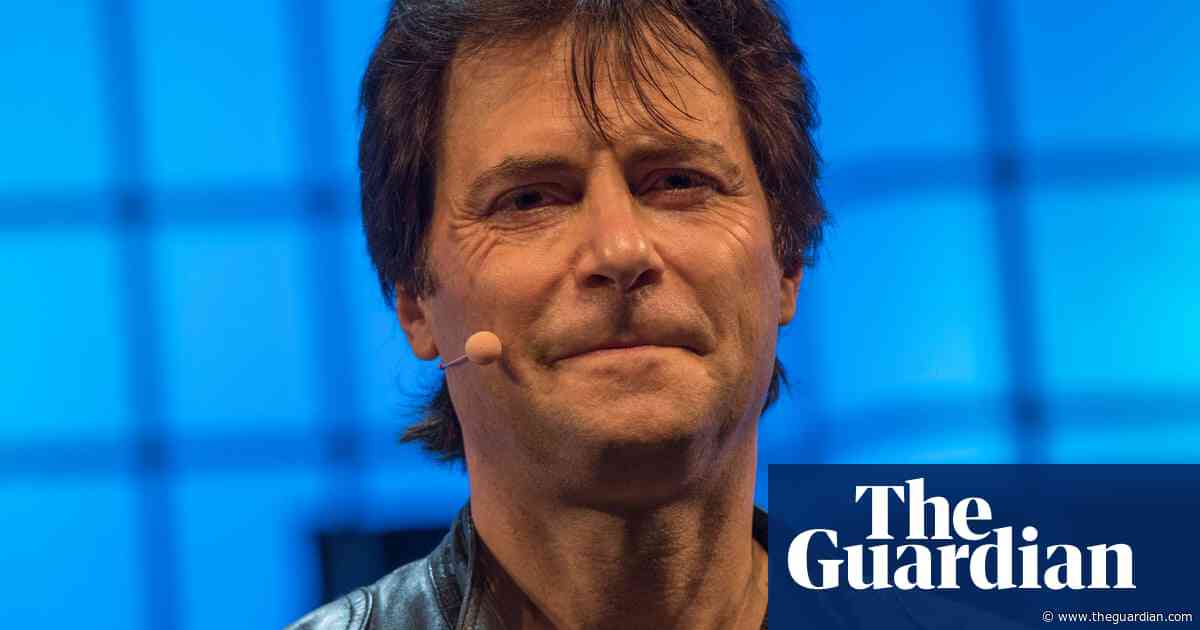

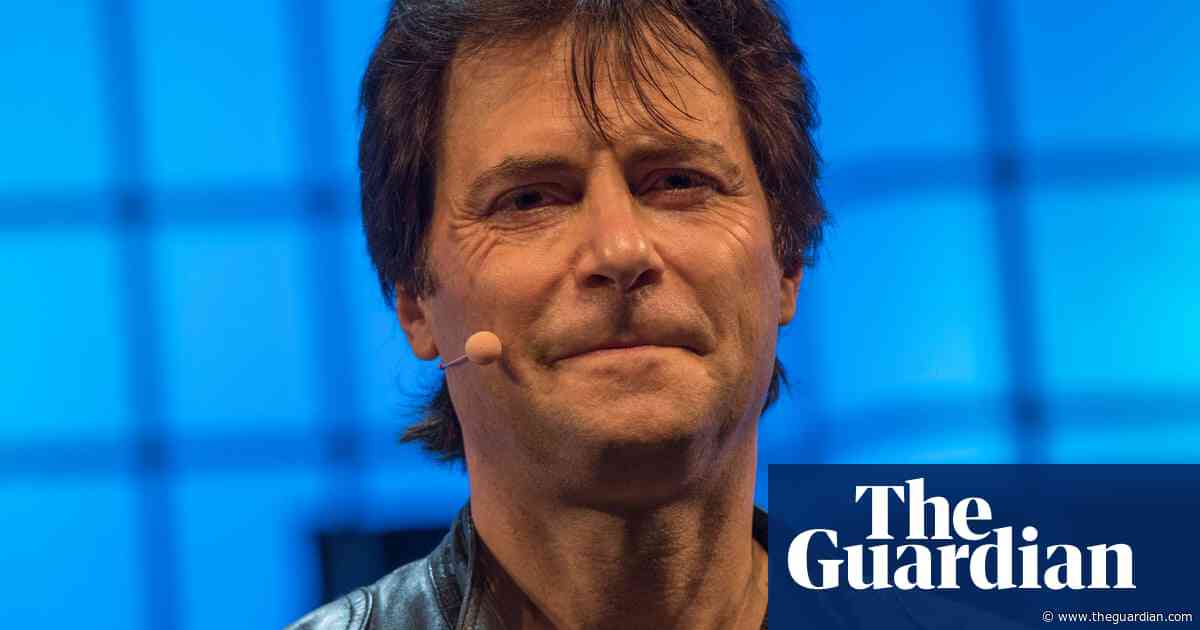

AI firms warned to calculate threat of super intelligence or risk it escaping human control

AI safety campaigner calls for existential threat assessment akin to Oppenheimer’s calculations before first nuclear test

Artificial intelligence companies have been urged to replicate the safety calculations that underpinned Robert Oppenheimer’s first nuclear test before they release all-powerful systems.

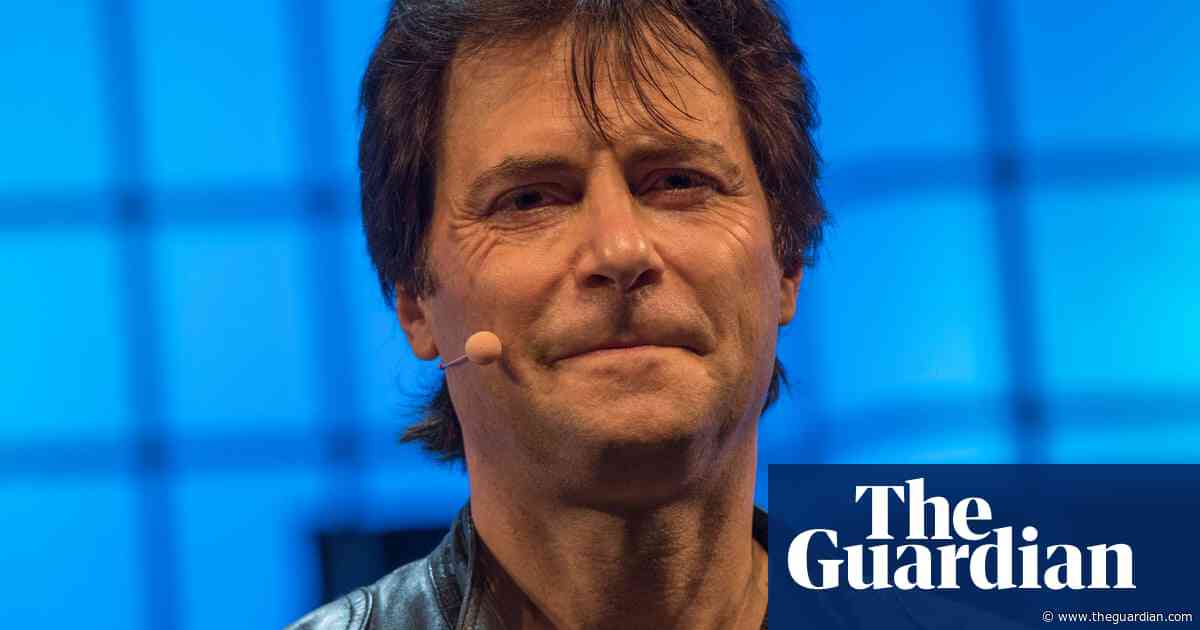

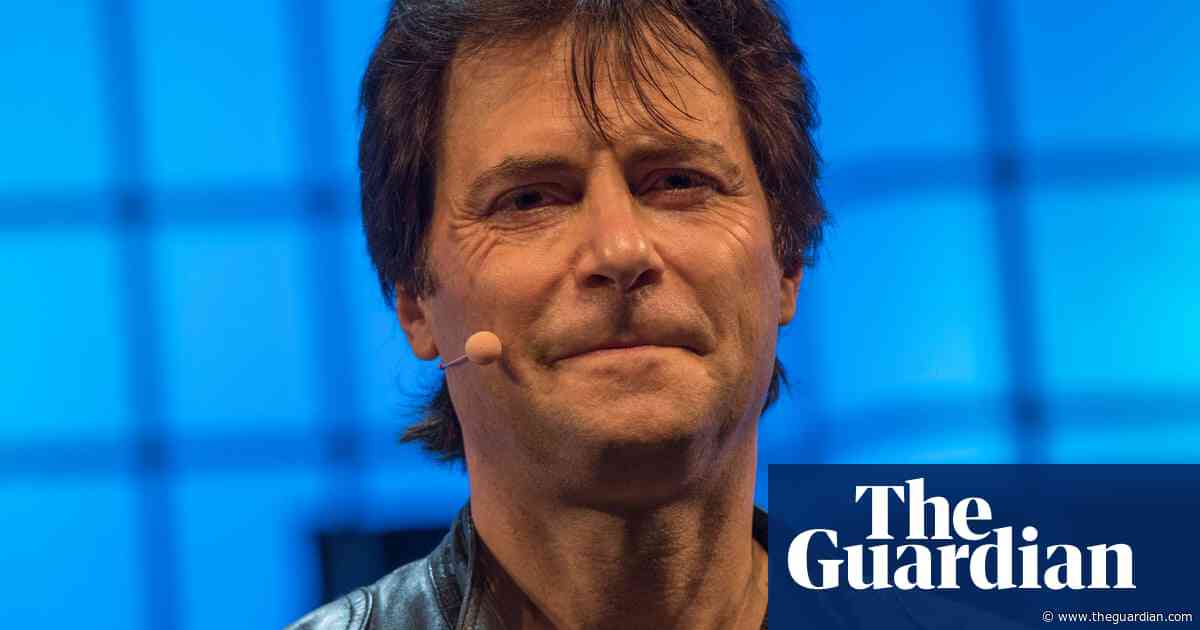

Max Tegmark, a leading voice in AI safety, said he had carried out calculations akin to those of the US physicist Arthur Compton before the Trinity test and had found a 90% probabil

Financial news